2 Risk Analysis fundamentals in software testing

This chapter provides a high-level overview of risk analysis fundamentals and is intended only as a basic introduction to the topic. Each of the activities described in this chapter is expanded upon in the included case study.

According to Webster's New World Dictionary, risk is "the chance of injury, damage or loss; dangerous chance; hazard".

The objective of Risk Analysis is to identify potential problems that could affect the cost or outcome of the project.

The objective of risk assessment is to take control of the potential problems before they control you. Remember: "prevention is always better than the cure".

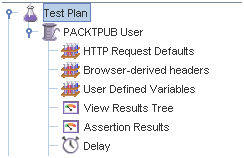

The following figure shows the activities involved in risk analysis. Each activity will be further discussed below.

Figure 1: Risk analysis activity model. This model is taken from Karolak's book "Software Engineering Risk Management", 1996 [6] with some additions made (the oval boxes) to show how this activity model fits in with the test process.

1.1 Risk Identification

The activity of identifying risk answers these questions:

· Is there risk to this function or activity?

· How can it be classified?

Risk identification involves collecting information about the project and classifying it to determine the amount of potential risk in the test phase and, later, in production.

The risk could be related to system complexity (e.g. embedded or distributed systems), new technology or methodology that could cause problems, limited business knowledge, or poor design and code quality.

1.2 Risk Strategy

Risk-based strategy and planning involve identifying and assessing risks and developing contingency plans, either for possible alternative project activities or for the mitigation of all risks. These plans are then used to direct the management of risks during the software testing activities. It is therefore possible to define an appropriate level of testing per function based on the risk assessment of that function. This approach also allows additional testing to be defined for functions that are critical, or that are identified as high risk as a result of testing (due to poor design, quality, documentation, etc.).
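As an illustration of defining a level of testing per function, the sketch below distributes a fixed test budget across functions in proportion to a risk score. The proportional rule, the function names and the scores are all assumptions made for this example; they are not taken from the case study.

```python
def allocate_test_hours(total_hours, risk_scores):
    """Split total_hours across functions in proportion to their risk score.

    risk_scores maps a function name to a positive numeric score
    (hypothetical values; any consistent scoring scheme could be used).
    """
    total_score = sum(risk_scores.values())
    if total_score <= 0:
        raise ValueError("at least one function must have a positive risk score")
    return {
        name: total_hours * score / total_score
        for name, score in risk_scores.items()
    }


# Hypothetical scores for three functions and a 90-hour test budget.
risk_scores = {
    "customer_transfer": 9,
    "interest_calculation": 6,
    "report_layout": 3,
}
plan = allocate_test_hours(90, risk_scores)
for name, hours in plan.items():
    print(f"{name}: {hours:.1f} hours")
```

A proportional split is only one possible policy. A project could equally reserve a minimum number of hours for every function and distribute only the remainder by risk.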

1.3 Risk Assessment

Assessing risks means determining the effects (including costs) of potential risks. Risk assessment involves asking questions such as: Is this a risk or not? How serious is the risk? What are the consequences? What is the likelihood of this risk happening? Decisions are made based on the risk being assessed. The decision may be to mitigate, manage or ignore the risk.

The important things to identify (and quantify) are:

· What indicators can be used to predict the probability of a failure?

The key is to identify what matters to the quality of this function. This may include design quality (e.g. how many change requests had to be raised), program size, complexity, programmers' skills, etc.

· What are the consequences if this particular function fails?

Very often it is impossible to quantify this accurately, but a low-medium-high (1-2-3) scale may be good enough to rank the individual functions.

By combining the consequence and the probability (from risk identification above) it should now be possible to rank the individual functions of a system. The ranking could be done based on "experience" or by empirical calculations. Examples of both are shown in the case study later in this paper.
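A minimal sketch of such a ranking is given below, using the low-medium-high (1-2-3) scale for both factors. Multiplying probability by consequence is one common way of combining them and is an assumption here, not necessarily the calculation used in the case study. The class name, function names and scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FunctionRisk:
    name: str
    probability: int  # 1 = low, 2 = medium, 3 = high (likelihood of failure)
    consequence: int  # 1 = low, 2 = medium, 3 = high (impact of failure)

    @property
    def exposure(self) -> int:
        # Assumed combination rule: probability multiplied by consequence.
        return self.probability * self.consequence


def rank_by_risk(functions):
    """Return the functions ordered from highest to lowest risk exposure."""
    return sorted(functions, key=lambda f: f.exposure, reverse=True)


# Hypothetical assessments for three functions.
functions = [
    FunctionRisk("interest_calculation", probability=2, consequence=3),
    FunctionRisk("report_layout", probability=3, consequence=1),
    FunctionRisk("customer_transfer", probability=3, consequence=3),
]

ranked = rank_by_risk(functions)
for f in ranked:
    print(f"{f.name}: exposure {f.exposure}")
```

With these scores the exposures are 9, 6 and 3, so customer_transfer is ranked first and report_layout last.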

1.4 Risk Mitigation

The activity of mitigating and avoiding risks is based on information gained from the previous activities of identifying, planning, and assessing risks. Risk mitigation/avoidance activities avoid risks or minimise their impact.

The idea is to use inspection and/or focused testing of the critical functions to minimise the impact that a failure in these functions would have in production.

1.5 Risk Reporting

Risk reporting is based on information obtained from the previous topics (those of identifying, planning, assessing, and mitigating risks).

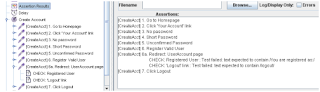

Risk reporting is very often done in a standard graph like the following:

Figure 2: Standard risk reporting - concentrate on those in the upper right corner!

In the test phase it is important to monitor the number of errors found, the number of errors per function, the classification of errors, the number of testing hours per error, the number of fixing hours per error, etc. The test metrics are discussed in detail in the case study later in this paper.
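Some of these metrics can be derived from a simple error log, as the following sketch suggests. The record layout (a `function` name and `fix_hours` per error) and the sample figures are assumptions made for illustration only.

```python
from collections import defaultdict


def summarise_errors(error_log, test_hours):
    """Return per-function metrics from a list of error records.

    error_log:  list of dicts with the keys "function" and "fix_hours".
    test_hours: dict mapping function name to hours spent testing it.
    Functions with no recorded errors do not appear in the result.
    """
    counts = defaultdict(int)
    fix_hours = defaultdict(float)
    for record in error_log:
        counts[record["function"]] += 1
        fix_hours[record["function"]] += record["fix_hours"]

    summary = {}
    for function, count in counts.items():
        summary[function] = {
            "errors": count,
            "test_hours_per_error": test_hours[function] / count,
            "fix_hours_per_error": fix_hours[function] / count,
        }
    return summary


# Hypothetical error log and test effort.
error_log = [
    {"function": "customer_transfer", "fix_hours": 4.0},
    {"function": "customer_transfer", "fix_hours": 2.0},
    {"function": "report_layout", "fix_hours": 1.0},
]
test_hours = {"customer_transfer": 12.0, "report_layout": 3.0}

summary = summarise_errors(error_log, test_hours)
for function, metrics in summary.items():
    print(function, metrics)
```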

1.6 Risk Prediction

Risk prediction is derived from the previous activities of identifying, planning, assessing, mitigating, and reporting risks. Risk prediction involves forecasting risks using the history and knowledge of previously identified risks.

During test execution it is important to monitor the quality of each individual function (number of errors found), and to add additional testing or even reject the function and send it back to development if the quality is unacceptable. This is an ongoing activity throughout the test phase.
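The monitoring decision described above could be sketched as a simple threshold check on the number of errors found per function. The two thresholds and the function names are hypothetical; in practice they would be agreed per project and might differ by function criticality.

```python
def review_function(errors_found, extra_testing_threshold=5, reject_threshold=15):
    """Suggest an action for a function based on the errors found so far.

    Both thresholds are illustrative assumptions, not values from the case study.
    """
    if errors_found >= reject_threshold:
        return "reject"       # send the function back to development
    if errors_found >= extra_testing_threshold:
        return "add_testing"  # quality is doubtful: schedule additional tests
    return "continue"         # quality is acceptable so far


# Hypothetical error counts part-way through the test phase.
errors_so_far = {
    "customer_transfer": 17,
    "interest_calculation": 6,
    "report_layout": 1,
}
actions = {name: review_function(count) for name, count in errors_so_far.items()}
for name, action in actions.items():
    print(f"{name}: {action}")
```

Because this is an ongoing activity, such a check would be re-run as new errors are logged rather than applied once at the end of testing.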